Cambridge Analytica is more than a data breach – it's a human rights problem

Courtesy of Lorna McGregor, University of Essex

The closure of Cambridge Analytica following the revelations about its use of Facebook data not only points to the need for greater business regulation. It also highlights the importance of thinking more about how people can bring human rights claims in cases like these.

The right to a remedy is often overlooked. That’s at least in part because it’s difficult to understand the extent of the harm caused by incidents like the kind involving Facebook and Cambridge Analytica. Data breaches are fairly common events, so companies can downplay their seriousness. Consumers, for their part, may have started to see such breaches as normal too. The harm is not immediately tangible and therefore can feel less real.

However, in incidents like these, the implications for human rights are serious and pervasive. The harm caused by data breaches is often framed as “just” privacy, as if a breach of privacy is not important. But it’s hard to see how accessing and sharing people’s private thoughts and opinions without meaningful consent isn’t a very serious act.

It’s also important to remember that privacy acts as a gatekeeper to other rights. Users’ rights to freedom of thought and opinion, and to freedom of assembly and association, are put at risk when their private information can be used against them in an effort to influence their views, including their political opinions. This has serious implications for the functioning of democracy.

In these types of situations, the risks to human rights are even greater if data is made accessible or even sold to third parties. Once out there, people’s data could be fed into algorithms to help companies and states make decisions about them. Those decisions could include whether to grant them a mortgage, whether to provide health insurance or whether to release them on bail after an arrest. People’s human rights could be at perpetual risk once their data has been shared, potentially without them even knowing it.

Making a claim

Under international human rights law, people have a right to make a claim when they have an arguable case that their rights have been violated. If successful, the United Nations says they are entitled to a remedy “capable of ending ongoing violations” as well as measures such as apologies, compensation and guarantees of non-repetition in order to ensure that violations don’t happen again.

This means that companies need to offer processes that address the human rights dimensions to a complaint. States also need to ensure that there are bodies, like courts and ombudspersons, available to hear complaints and that victims are supported in bringing a claim, for example, through legal aid.

However, the Facebook and Cambridge Analytica incident reveals the significant obstacles people face in claiming their rights. As part of the debate on regulation of technology companies, these obstacles merit more attention and need to be addressed.

Victims can only bring a claim if they know their rights have been put at risk. Facebook reportedly knew of the situation in 2015 and claims that it sought assurances from Cambridge Analytica that the data had been deleted. However, users were only notified once investigative journalists broke the story years later. These delays could in themselves be seen as a human rights issue. States and businesses should be under a clear obligation to promptly notify people if their rights have been potentially affected so that they can bring a claim.

Ending ongoing violations is also tricky in a case like this. If data has been accessed, shared or sold to third parties, deleting the initial data set will not put a stop to its use further along the chain. Tracing the journey of data is difficult, particularly if it has been combined with other data sets. This again highlights the need for regulation to prevent these situations from occurring in the first place.

Ascertaining the data journey, and whether and how decisions have been made on the basis of the data, is critical for assessing the level of harm and the compensation that should be awarded. Where the data journey cannot be fully traced – and the data therefore cannot be deleted – the harm can continue into the future, potentially without end. Compensation should therefore not only focus on harm that can be identified already but also take into account potential future harm where the data is still out there.

Even if all these issues were addressed, the case of Cambridge Analytica and Facebook highlights the problems of companies closing down. As the chair of the House of Commons Select Committee for Digital, Culture, Media and Sport has already noted, it’s crucial that the Cambridge Analytica closure does not result in the destruction of data until investigations are complete; otherwise the level of harm and who is accountable will be difficult to determine.

Lorna McGregor, Director, Human Rights Centre, PI and Co-Director, ESRC Human Rights, Big Data and Technology Large Grant, University of Essex

This article was originally published on The Conversation. Read the original article.

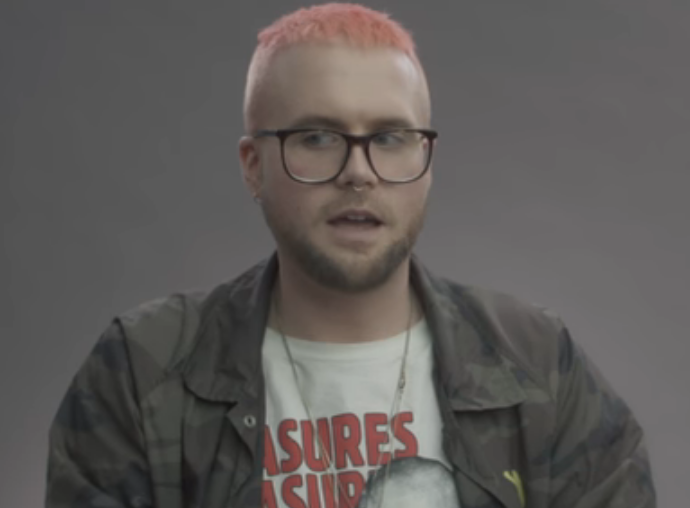

Picture of whistle-blower Christopher Wylie from The Guardian video on Cambridge Analytica (below).