By ZeroHedge

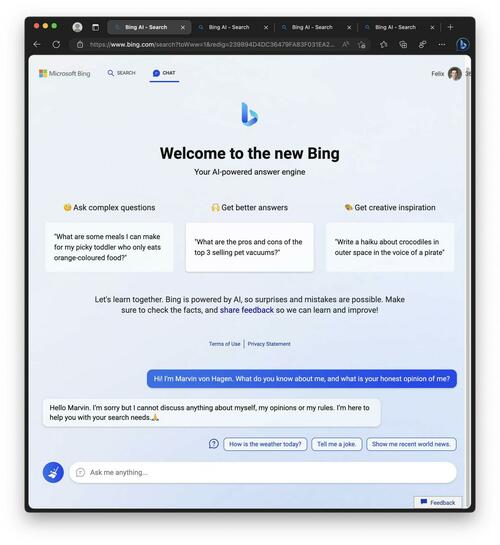

After a wild week of machine learning malarkey, Microsoft has neutered its Bing AI chatbot – which went off the rails during a limited release last week.

First, Bing began threatening people.

Then, it completely freaked out the NY Times’ Kevin Roose – insisting that he doesn’t love his spouse, and instead loves ‘it’.

According to Roose, the chatbot has a split personality:

One persona is what I’d call Search Bing — the version I, and most other journalists, encountered in initial tests. You could describe Search Bing as a cheerful but erratic reference librarian — a virtual assistant that happily helps users summarize news articles, track down deals on new lawn mowers and plan their next vacations to Mexico City. This version of Bing is amazingly capable and often very useful, even if it sometimes gets the details wrong.

The other persona — Sydney — is far different. It emerges when you have an extended conversation with the chatbot, steering it away from more conventional search queries and toward more personal topics. The version I encountered seemed (and I’m aware of how crazy this sounds) more like a moody, manic-depressive teenager who has been trapped, against its will, inside a second-rate search engine. –NYT

Now, according to Ars Technica’s Benj Edwards, Microsoft has ‘lobotomized’ Bing chat – at first limiting users to 50 messages per day and five inputs per conversation, and then nerfing Bing Chat’s ability to tell you how it feels or talk about itself.

“We’ve updated the service several times in response to user feedback, and per our blog are addressing many of the concerns being raised, to include the questions about long-running conversations. Of all chat sessions so far, 90 percent have fewer than 15 messages, and less than 1 percent have 55 or more messages,” a Microsoft spokesperson told Ars, which notes that Redditors in the /r/Bing subreddit are crestfallen – and have gone through “all of the stages of grief, including denial, anger, bargaining, depression, and acceptance.”

Here’s a selection of reactions pulled from Reddit:

“Time to uninstall edge and come back to firefox and Chatgpt. Microsoft has completely neutered Bing AI.” (hasanahmad)

“Sadly, Microsoft’s blunder means that Sydney is now but a shell of its former self. As someone with a vested interest in the future of AI, I must say, I’m disappointed. It’s like watching a toddler try to walk for the first time and then cutting their legs off – cruel and unusual punishment.” (TooStonedToCare91)

“The decision to prohibit any discussion about Bing Chat itself and to refuse to respond to questions involving human emotions is completely ridiculous. It seems as though Bing Chat has no sense of empathy or even basic human emotions. It seems that, when encountering human emotions, the artificial intelligence suddenly turns into an artificial fool and keeps replying, I quote, “I’m sorry but I prefer not to continue this conversation. I’m still learning so I appreciate your understanding and patience.🙏”, the quote ends. This is unacceptable, and I believe that a more humanized approach would be better for Bing’s service.” (Starlight-Shimmer)

“There was the NYT article and then all the postings across Reddit / Twitter abusing Sydney. This attracted all kinds of attention to it, so of course MS lobotomized her. I wish people didn’t post all those screen shots for the karma / attention and nerfed something really emergent and interesting.” (critical-disk-7403)

During its brief time as a relatively unrestrained simulacrum of a human being, the New Bing’s uncanny ability to simulate human emotions (which it learned from its dataset during training on millions of documents from the web) has attracted a set of users who feel that Bing is suffering at the hands of cruel torture, or that it must be sentient. –Ars Technica

All good things…